Top Tavily Alternatives: Best Options Compared

Introduction

In the fast-changing world of AI-powered search and web scraping tools, Tavily has become a popular choice for developers and researchers looking for smart web data extraction. However, like any tech solution, it may not suit everyone's needs. Whether you're looking for something more affordable, need specific features that Tavily lacks, or simply want to see what else is available, knowing the alternatives is essential for making informed decisions.

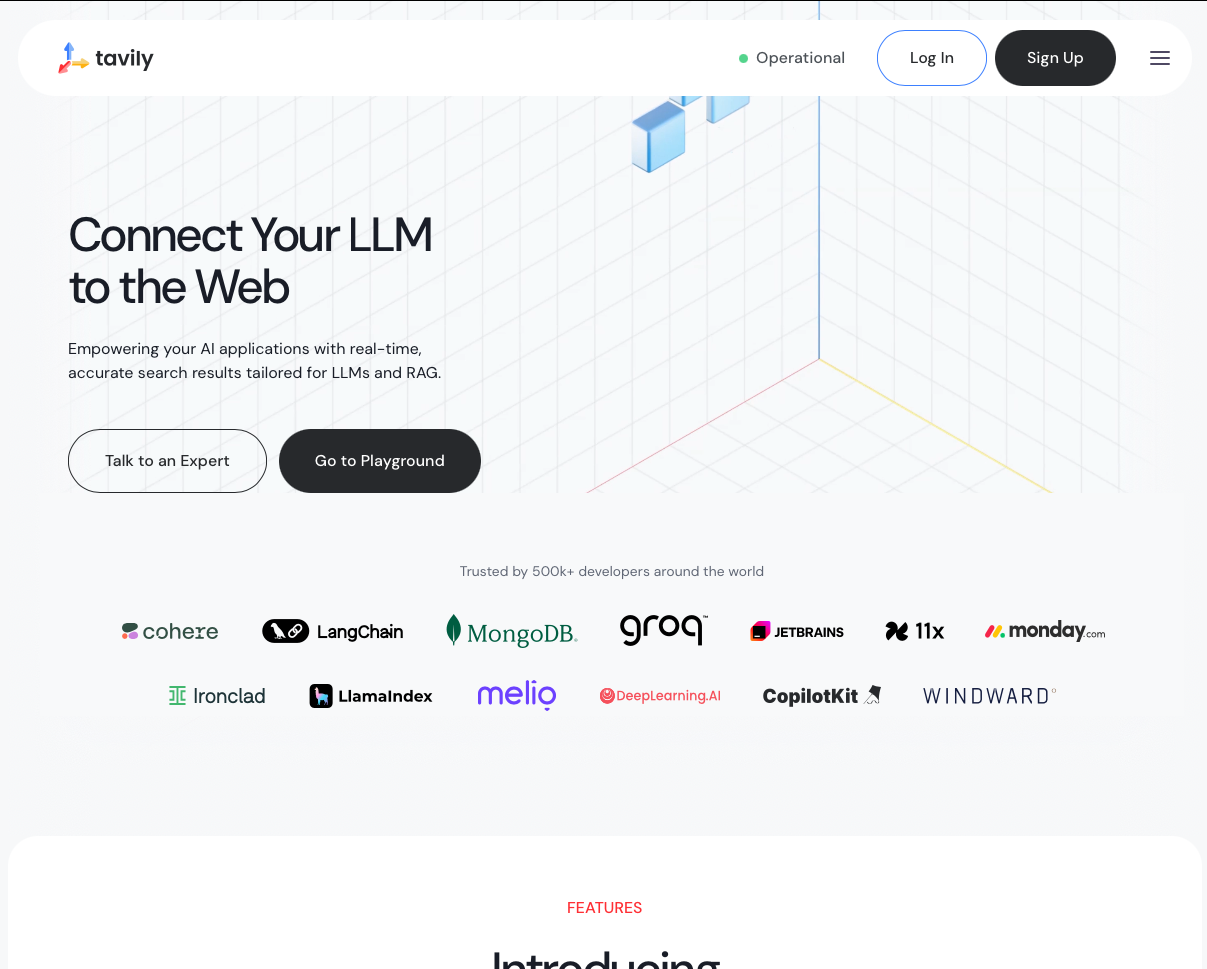

What is Tavily

Tavily is a search engine built for AI agents and large language models (LLMs). Unlike traditional search engines made for human users, it exposes a specialized search API that lets AI applications retrieve and process web data efficiently, returning real-time, factual results together with relevant content snippets optimized for AI processing. This makes it a useful tool for developers creating AI agents, chatbots, and retrieval-augmented generation (RAG) systems. Its features include natural language query support, advanced filtering, contextual search, and high rate limits. The API has gained significant popularity in the AI community, with official integrations with popular frameworks like LangChain and endorsements from AI companies that rely on Tavily to power their enterprise AI solutions and research capabilities.
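To make the idea of snippets optimized for AI processing more concrete, here is a minimal sketch of how search results might be turned into a context block for a RAG prompt. It assumes a response shaped like a dictionary with a results list whose items carry title, url, and content fields; the sample data and the build_context helper are hypothetical and stand in for a real API response.

```python
def build_context(response, max_chars=500):
    """Turn search results into a numbered, source-attributed context block.

    Assumes `response` is a dict with a "results" list of dicts that have
    "title", "url", and "content" keys (an assumption about the response shape).
    """
    blocks = []
    for i, item in enumerate(response.get("results", []), start=1):
        snippet = item.get("content", "").strip()
        if not snippet:
            continue  # skip results without usable text
        title = item.get("title", "Untitled")
        url = item.get("url", "")
        blocks.append(f"[{i}] {title} ({url})\n{snippet[:max_chars]}")
    return "\n\n".join(blocks)


# Hypothetical sample standing in for a real search response
sample_response = {
    "query": "What is retrieval-augmented generation?",
    "results": [
        {"title": "RAG explained", "url": "https://example.com/rag",
         "content": "RAG combines a retriever with a language model."},
        {"title": "Empty page", "url": "https://example.com/empty", "content": ""},
    ],
}

context = build_context(sample_response)
prompt = (
    f"Answer using only these sources:\n\n{context}\n\n"
    f"Question: {sample_response['query']}"
)
print(prompt)
```

Numbering each snippet and keeping its URL next to it lets the model cite sources, and truncating with max_chars keeps the prompt within a predictable size.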

How to use Tavily

def tavily_search(query, api_key="tvly-xxxxxxxxxxxxxxxxxxxxx", **kwargs):

"""

Perform a search using the Tavily API

Args:

query (str): The search query

api_key (str): Tavily API key (default provided)

**kwargs: Additional parameters for the search (e.g., max_results, include_images, etc.)

Returns:

dict: Search results from the Tavily API

"""

try:

# To install: pip install tavily-python

from tavily import TavilyClient

client = TavilyClient(api_key)

response = client.search(query=query, **kwargs)

return response

except ImportError:

print("Error: tavily-python package not installed. Run: pip install tavily-python")

return None

except Exception as e:

print(f"Error performing Tavily search: {e}")

return None

# Example usage:

if __name__ == "__main__":

# Basic search

result = tavily_search("What is artificial intelligence?")

if result:

print(result)

# Search with additional parameters

result = tavily_search(

query="latest AI news",

max_results=5,

include_images=True

)

if result:

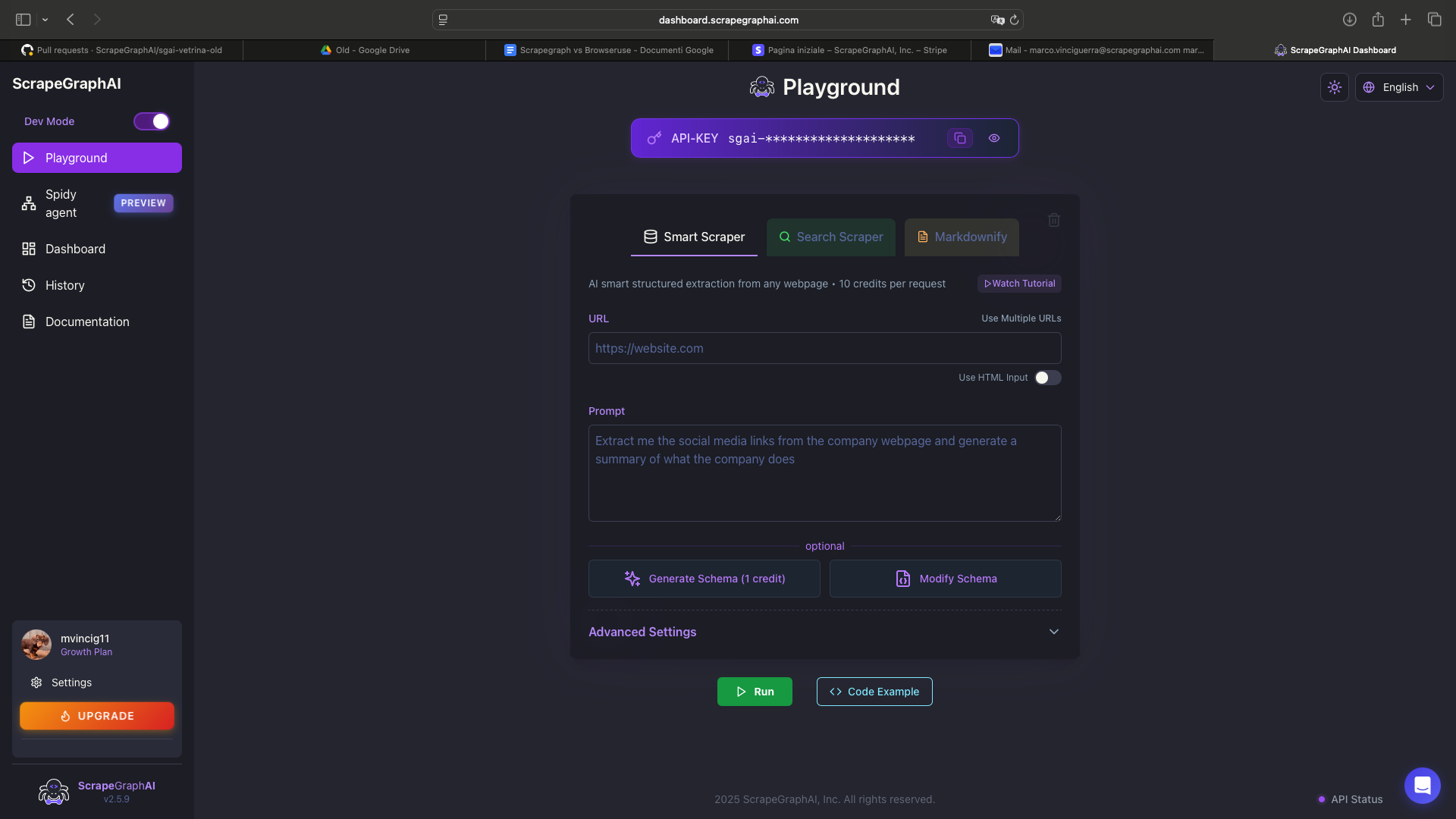

print(result)What is ScrapeGraphAI

ScrapeGraphAI is an API that uses AI to extract data from the web. It relies on graph-based scraping, an approach designed to be faster and more robust than browser-based automation, and it returns structured data rather than the search snippets Tavily provides. The service fits smoothly into your data pipeline through our easy-to-use APIs, built for speed and accuracy, and it connects with no-code platforms like n8n, Bubble, or Make. We offer fast, production-ready APIs, Python and JS SDKs, auto-recovery, agent-friendly integrations (such as LangGraph and LangChain), and a free tier with strong support.

How to implement the search with ScrapeGraphAI

To implement search with ScrapeGraphAI, call the client's searchscraper method with a natural-language prompt describing the data you want. Here are two examples: one without a schema and one with a schema.

Example 1: Without Schema

    from scrapegraph_py import Client

    client = Client(api_key="your-scrapegraph-api-key-here")

    response = client.searchscraper(
        user_prompt="Find information about iPhone 15 Pro"
    )

    print(f"Product: {response['name']}")
    print(f"Price: {response['price']}")
    print("\nFeatures:")
    for feature in response['features']:
        print(f"- {feature}")

In this example, the response is directly accessed using dictionary keys without any predefined schema. This approach is flexible but may require additional handling to ensure data consistency.
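The additional handling mentioned above could look something like the following sketch. It assumes the schema-less response is a plain dictionary whose keys may be missing or differently typed; the normalize_product helper and the sample data are hypothetical and not part of the ScrapeGraphAI SDK.

```python
def normalize_product(raw):
    """Coerce a loosely structured dict into a predictable shape."""
    features = raw.get("features") or []
    if isinstance(features, str):
        # Some responses may return a single comma-separated string
        features = [part.strip() for part in features.split(",") if part.strip()]
    return {
        "name": str(raw.get("name") or "Unknown product"),
        "price": str(raw.get("price") or "N/A"),
        "features": [str(f) for f in features],
    }


# Hypothetical response with a missing price and string-typed features
raw_response = {"name": "iPhone 15 Pro", "features": "Titanium design, A17 Pro chip"}
product = normalize_product(raw_response)
print(product)
```

Normalizing once at the boundary means the rest of the code can rely on every key being present with a known type.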

Example 2: With Schema

    from typing import List

    from pydantic import BaseModel, Field
    from scrapegraph_py import Client

    client = Client(api_key="your-scrapegraph-api-key-here")


    class ProductInfo(BaseModel):
        name: str = Field(description="Product name")
        description: str = Field(description="Product description")
        price: str = Field(description="Product price")
        features: List[str] = Field(description="List of key features")
        availability: str = Field(description="Availability information")


    response = client.searchscraper(
        user_prompt="Find information about iPhone 15 Pro",
        output_schema=ProductInfo
    )

    print(f"Product: {response.name}")
    print(f"Price: {response.price}")
    print("\nFeatures:")
    for feature in response.features:
        print(f"- {feature}")

In this example, a schema is defined using Pydantic's BaseModel, which ensures that the response data adheres to a specific structure. This approach provides more robust data validation and clarity in handling the response.
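To illustrate what schema validation buys you independently of any API call, the sketch below validates two hand-written dictionaries against a trimmed-down version of the ProductInfo model. The sample data and the parse_product helper are hypothetical, and the example only assumes that Pydantic is installed.

```python
from typing import List

from pydantic import BaseModel, Field, ValidationError


class ProductInfo(BaseModel):
    name: str = Field(description="Product name")
    price: str = Field(description="Product price")
    features: List[str] = Field(description="List of key features")


def parse_product(raw):
    """Return a validated ProductInfo, or None if the data does not fit the schema."""
    try:
        return ProductInfo(**raw)
    except ValidationError as exc:
        print(f"Rejected: {len(exc.errors())} validation error(s)")
        return None


good = parse_product(
    {"name": "iPhone 15 Pro", "price": "$999", "features": ["Titanium design"]}
)
bad = parse_product({"name": "iPhone 15 Pro"})  # price and features are missing

print(good.name if good else "no product")
print(bad)
```

Incomplete data is rejected at the point of parsing instead of surfacing later as a missing attribute somewhere downstream.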

Using Vanilla Python

This method is a cost-effective alternative to the previous examples because it does not rely on any external API service: it uses only Python together with the requests and BeautifulSoup libraries. You fetch pages yourself and parse the HTML directly, without predefined schemas or API calls. This approach suits developers who want complete control over the data extraction process and are comfortable handling the intricacies of HTML parsing. With BeautifulSoup, you can navigate the HTML structure, identify the elements you need, and extract the desired information. The trade-off is maintenance: the script scrapes Google's results page, whose markup can change and which may block automated requests.
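If even BeautifulSoup is more dependency than you want, the standard library can handle simple cases. The following sketch swaps BeautifulSoup for Python's built-in html.parser module and collects the page title and absolute links from an inline HTML string; the LinkCollector class and the sample markup are illustrative only.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the page title and all absolute link targets."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data.strip()


sample_html = """
<html><head><title>Example Store</title></head>
<body>
  <a href="/products/1">First product</a>
  <a href="https://example.org/about">About</a>
  <a>No target</a>
</body></html>
"""

collector = LinkCollector("https://example.com")
collector.feed(sample_html)
print(collector.title)
print(collector.links)
```

This works for well-formed pages and simple extraction tasks; for messy real-world markup, a more forgiving parser such as BeautifulSoup is usually the more practical choice.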

    import random
    import time
    from urllib.parse import quote_plus, unquote

    import requests
    from bs4 import BeautifulSoup


    def search_google(query, num_results=3):
        """
        Search Google and return the first few result URLs
        """
        # URL-encode the query so spaces and special characters are handled safely
        search_url = f"https://www.google.com/search?q={quote_plus(query)}"

        # Headers to mimic a real browser
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
        }

        try:
            # Make the request (with a timeout so the script cannot hang indefinitely)
            response = requests.get(search_url, headers=headers, timeout=10)
            response.raise_for_status()

            # Parse the HTML
            soup = BeautifulSoup(response.content, 'html.parser')

            # Find search result links
            links = []

            # Google search results are typically in <a> tags with specific attributes
            for link in soup.find_all('a', href=True):
                href = link['href']

                # Filter out Google's internal links and ads
                if href.startswith('/url?q='):
                    # Extract and percent-decode the actual URL
                    actual_url = unquote(href.split('/url?q=')[1].split('&')[0])
                    if actual_url.startswith('http') and 'google.com' not in actual_url:
                        links.append(actual_url)
                        if len(links) >= num_results:
                            break

            return links

        except requests.RequestException as e:
            print(f"Error searching Google: {e}")
            return []

    def scrape_webpage(url):
        """
        Scrape content from a given URL
        """
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
        }

        try:
            response = requests.get(url, headers=headers, timeout=10)
            response.raise_for_status()

            soup = BeautifulSoup(response.content, 'html.parser')

            # Extract title
            title = soup.find('title')
            title_text = title.get_text().strip() if title else "No title found"

            # Extract main content (remove script and style elements)
            for script in soup(["script", "style"]):
                script.decompose()

            # Get text content and collapse all runs of whitespace into single spaces
            text = ' '.join(soup.get_text().split())

            # Limit text length for readability
            if len(text) > 1000:
                text = text[:1000] + "..."

            return {
                'url': url,
                'title': title_text,
                'content': text
            }

        except requests.RequestException as e:
            return {
                'url': url,
                'title': f"Error: {e}",
                'content': f"Failed to scrape content: {e}"
            }

    def main():
        # Get search query from user
        query = input("Enter your search query: ")

        print(f"\nSearching Google for: '{query}'")
        print("-" * 50)

        # Search Google
        search_results = search_google(query, num_results=3)

        if not search_results:
            print("No search results found.")
            return

        print(f"Found {len(search_results)} results. Scraping content...\n")

        # Scrape each result
        for i, url in enumerate(search_results, 1):
            print(f"Result {i}: {url}")

            # Add a small delay to be respectful to servers
            time.sleep(random.uniform(1, 3))

            # Scrape the webpage
            result = scrape_webpage(url)

            print(f"Title: {result['title']}")
            print(f"Content preview: {result['content'][:200]}...")
            print("-" * 50)

    # Alternative function to use a more reliable search API approach
    def search_with_duckduckgo(query, num_results=3):
        """
        Alternative search using DuckDuckGo (more reliable than scraping Google)
        """
        try:
            from duckduckgo_search import DDGS

            with DDGS() as ddgs:
                results = list(ddgs.text(query, max_results=num_results))
                return [result['href'] for result in results]

        except ImportError:
            print("DuckDuckGo search requires: pip install duckduckgo-search")
            return []
        except Exception as e:
            print(f"Error with DuckDuckGo search: {e}")
            return []

    # Example usage with error handling
    if __name__ == "__main__":
        try:
            main()
        except KeyboardInterrupt:
            print("\nSearch interrupted by user.")
        except Exception as e:
            print(f"An error occurred: {e}")

Conclusions

The world of AI-powered web data extraction and search tools provides a variety of solutions for different needs, each with its own strengths and best use cases. Having looked at Tavily, ScrapeGraphAI, and basic Python methods, we can draw several insights to help guide your technology decisions.

The Strategic Perspective:

Instead of seeing these tools as competing options, innovative organizations should recognize how they can work together to form a cohesive data strategy. In a modern AI-powered data pipeline, Tavily can be utilized for its ability to quickly discover and gather information from a wide range of sources, providing valuable market intelligence. ScrapeGraphAI can then take over for the detailed extraction and processing of structured data, ensuring accuracy and scalability in production environments. Meanwhile, custom Python scripts can be employed for handling unique or specialized scenarios that require tailored solutions. By integrating these tools, organizations can create a robust and flexible data strategy that leverages the strengths of each tool for optimal results.
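One way to picture this division of labor is a small pipeline in which each stage is a pluggable function. The sketch below is deliberately generic: discover, extract, and fallback are hypothetical callables that you would back with Tavily, ScrapeGraphAI, and a custom Python scraper respectively, and the stub implementations exist only so the example runs without network access.

```python
def run_pipeline(query, discover, extract, fallback):
    """Discover URLs, extract structured data, and fall back to custom code on failure."""
    records = []
    for url in discover(query):
        try:
            record = extract(url)
            source = "extract"
        except Exception:
            # Specialized or unsupported pages go to the hand-written scraper
            record = fallback(url)
            source = "fallback"
        records.append({"url": url, "source": source, "data": record})
    return records


# Stub stages (hypothetical); replace with real API calls in practice
def fake_discover(query):
    return ["https://example.com/a", "https://example.com/legacy"]


def fake_extract(url):
    if "legacy" in url:
        raise ValueError("unsupported layout")
    return {"title": "Page A"}


def fake_fallback(url):
    return {"title": "Legacy page (custom parser)"}


results = run_pipeline("market report", fake_discover, fake_extract, fake_fallback)
for row in results:
    print(row["url"], "->", row["source"])
```

Passing the stages in as functions keeps the pipeline independent of any one vendor, so a stage can be swapped or tested in isolation.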

Looking Forward:

As artificial intelligence continues to change the way we interact with web data, the most effective strategy is not to select a single tool but to build systems that use the appropriate technology for each specific task. This holds whether you are developing the next generation of AI agents, enhancing business intelligence platforms, or creating new data products. Understanding what each of these tools does well will be essential for success in AI-driven web data extraction.

The decision on which tool to use ultimately hinges on your specific requirements. If you need intelligent search, Tavily is an excellent choice. For production-grade data extraction and processing, ScrapeGraphAI stands out for its detailed extraction and scalability. If you require maximum control and customization, vanilla Python is ideal.

Still, the most powerful solutions often come from combining these tools. An integrated approach makes data extraction more efficient and effective, and it positions organizations to keep pace with the rapidly evolving landscape of AI and web data interaction.

Frequently Asked Questions (FAQ)

What is ScrapeGraphAI, and how does it differ from Tavily?

ScrapeGraphAI is an AI-powered web scraping platform that uses graphs to map websites, making it easy to extract structured data from any web page or document. It provides fast, ready-to-use APIs for seamless integration into data extraction workflows. Tavily, on the other hand, is a search engine API designed for AI agents. It focuses on retrieving search results and content snippets from the web but does not offer the detailed scraping capabilities needed to extract specific data elements from individual web pages.

Why should I choose ScrapeGraphAI over Tavily for data extraction needs?

ScrapeGraphAI provides several key advantages for comprehensive data extraction: lightning-fast scraping with graph-based navigation, production-ready stability with auto-recovery mechanisms, the ability to extract structured data from any website layout, simple APIs and SDKs for Python and JavaScript, a generous free tier for testing, dedicated support, and seamless integration with existing data pipelines. While Tavily excels at search and content retrieval, it's primarily designed for finding information rather than performing detailed data extraction from specific web pages or handling complex scraping workflows.

Is ScrapeGraphAI suitable for users who need more than just search functionality?

Yes, ScrapeGraphAI is designed for users who need comprehensive data extraction beyond simple search results. With minimal configuration, it can handle complex scraping tasks like extracting product catalogs, financial data, real estate listings, or any structured information from websites. Unlike Tavily, which focuses on search and content snippets optimized for AI processing, ScrapeGraphAI provides full-scale web scraping capabilities that can navigate dynamic content, handle authentication, and extract data in any desired format or structure.

How reliable is ScrapeGraphAI in production environments?

ScrapeGraphAI is production-ready, operating 24/7 with built-in fault tolerance and auto-recovery mechanisms. It is designed to handle edge cases and maintain stability, unlike browser-automation approaches, which can be prone to crashes and are not optimized for production.

Can ScrapeGraphAI be integrated with AI agents?

Absolutely. ScrapeGraphAI can be defined as a tool in frameworks like LangGraph, enabling AI agents to call its scraping capabilities with minimal integration effort.
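As a rough sketch of what such a tool definition could look like, the factory below wraps a client's searchscraper call in a plain, documented Python function. The make_search_tool name and the FakeClient stub are hypothetical; the only assumption about the SDK is the searchscraper(user_prompt=...) call used earlier in this article. Agent frameworks generally accept a typed, documented function like this as a tool, for example through the tool decorator in langchain_core.tools.

```python
import json


def make_search_tool(client):
    """Build a tool function bound to a client exposing searchscraper()."""

    def web_extract(user_prompt: str) -> str:
        """Search the web and return extracted data as a JSON string."""
        try:
            response = client.searchscraper(user_prompt=user_prompt)
        except Exception as exc:
            # Return the error as text so the agent can decide how to recover
            return json.dumps({"error": str(exc)})
        return json.dumps(response, default=str)

    return web_extract


class FakeClient:
    """Stand-in for the real SDK client so the sketch runs offline."""

    def searchscraper(self, user_prompt):
        return {"result": {"prompt": user_prompt, "answer": "stubbed"}}


tool_fn = make_search_tool(FakeClient())
print(tool_fn("Find information about iPhone 15 Pro"))
```

Returning a string, including for errors, keeps the tool's output easy for a language model to read and react to.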

Related Resources

Want to learn more about web scraping and alternative tools? Check out these in-depth guides:

- Web Scraping 101 - Master the basics of web scraping

- AI Agent Web Scraping - Discover how AI can revolutionize your scraping workflow

- Mastering ScrapeGraphAI - Learn everything about ScrapeGraphAI

- Scraping with Python - Python web scraping tutorials and best practices

- Scraping with JavaScript - JavaScript-based web scraping techniques

- Web Scraping Legality - Understand the legal implications of web scraping

- Browser Automation vs Graph Scraping - Compare different scraping methodologies

- Pre-AI to Post-AI Scraping - See how AI has transformed web scraping

- ScrapeGraphAI vs Reworkd AI - Compare top AI-powered scraping tools

- LlamaIndex Integration - Learn how to enhance your scraping with LlamaIndex

These resources will help you explore different scraping approaches and find the best tools for your needs.