eBay Data Scraping: My Journey from Manual Price Checking to Automated Intelligence

I still remember the days when I'd spend hours manually checking eBay listings, comparing prices, and trying to understand market trends. As someone who's been buying and selling on eBay for years, I knew there had to be a better way. That's when I discovered the power of automated data extraction.

Why I Started Scraping eBay

It all started with a simple problem: I was trying to price some vintage electronics I wanted to sell. Manually checking hundreds of similar listings was driving me crazy. I'd open tab after tab, jotting down prices in a spreadsheet, trying to figure out the sweet spot for my listings.

After doing this for a few months, I realized all that listing data was a goldmine:

- Price trends - Understanding how prices fluctuate over time

- Market demand - Seeing what actually sells vs. what sits forever

- Competitor analysis - Learning from successful sellers in my niche

- Seasonal patterns - Discovering when certain items are hot

- Optimization insights - Understanding what makes listings perform better

eBay's auction format makes it particularly interesting for data analysis. Unlike on fixed-price marketplaces, you can watch bidding behavior as it happens and, for completed listings, see the final sale prices.

My eBay Scraping Setup

After trying various approaches (and getting my IP temporarily blocked a few times), I settled on ScrapeGraphAI. It handles eBay's dynamic content much better than traditional scrapers, and the structured output makes analysis so much easier.

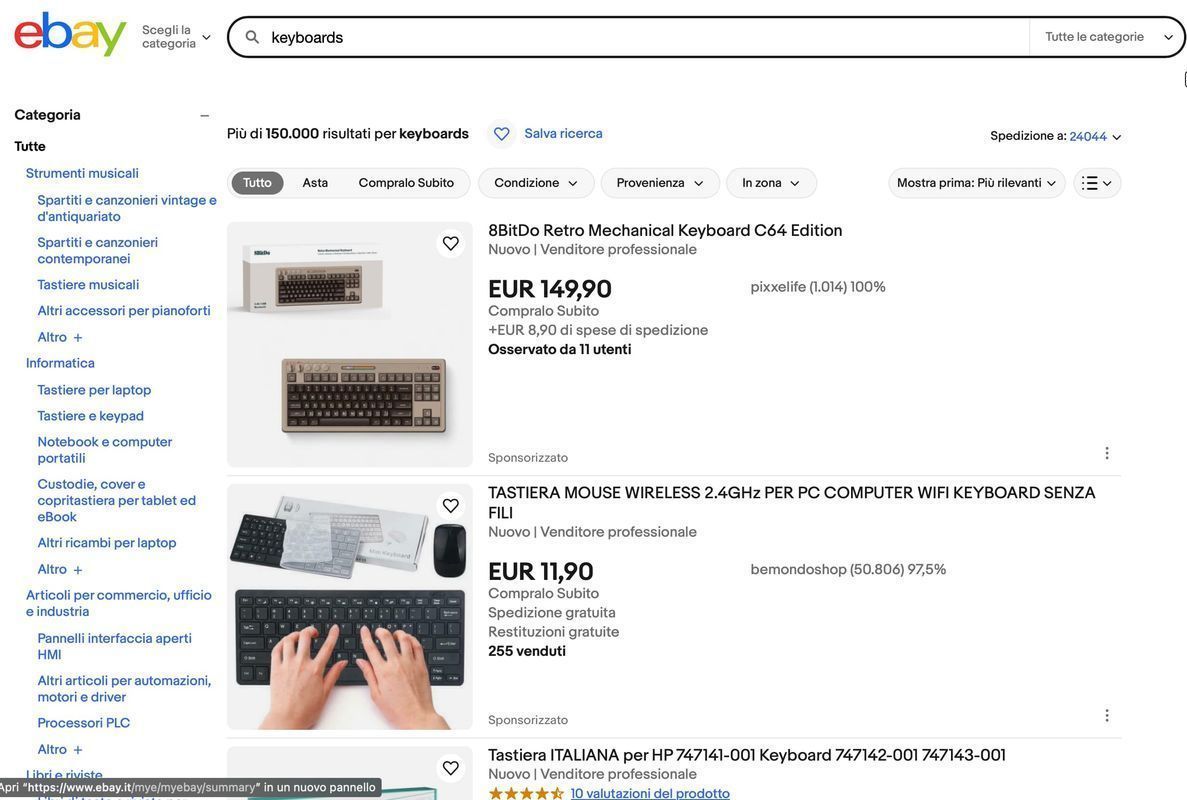

Here's how I typically extract keyboard pricing data from eBay Italy:

Python Implementation

```python
from scrapegraph_py import Client
from scrapegraph_py.logger import sgai_logger
from pydantic import BaseModel, Field

# I always enable logging when testing new queries
sgai_logger.set_logging(level="INFO")

class EbayProduct(BaseModel):
    name: str = Field(..., description="The keyboard name")
    price: float = Field(..., description="The current price")

# A search page holds many listings, so the schema wraps a list of products
class EbayProducts(BaseModel):
    products: list[EbayProduct] = Field(..., description="All keyboard listings on the page")

sgai_client = Client(api_key="sgai-********************")

# This is my standard eBay search URL for keyboards
response = sgai_client.smartscraper(
    website_url=(
        "https://www.ebay.it/sch/i.html?_nkw=keyboards&_sacat=0&_from=R40"
        "&_trksid=p4432023.m570.l1313"
    ),
    user_prompt="Extract the keyboard name and price from each listing",
    output_schema=EbayProducts,
)

# The SDK returns a dict; the extracted data sits under "result"
products = response["result"]["products"]
print("Found", len(products), "keyboards")
for keyboard in products:
    print(f"- {keyboard['name']}: €{keyboard['price']}")

sgai_client.close()
```
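Printing is fine for a quick check, but price trends only show up if you keep every run. A minimal sketch that appends each run to a CSV with a timestamp, assuming rows shaped like the `{"name": ..., "price": ...}` dicts printed above; `append_snapshot` and the file name are hypothetical:

```python
import csv
from datetime import datetime, timezone
from pathlib import Path


def append_snapshot(rows, csv_path, scraped_at=None):
    """Append scraped listings to a CSV, stamping each row with the scrape time.

    rows: iterable of dicts with "name" and "price" keys (assumed shape).
    Returns the number of rows written.
    """
    path = Path(csv_path)
    scraped_at = scraped_at or datetime.now(timezone.utc)
    # Write the header only when the file is new or empty
    write_header = not path.exists() or path.stat().st_size == 0
    count = 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["scraped_at", "name", "price"])
        if write_header:
            writer.writeheader()
        for row in rows:
            writer.writerow({
                "scraped_at": scraped_at.isoformat(),
                "name": row["name"],
                "price": row["price"],
            })
            count += 1
    return count
```

Calling `append_snapshot(rows, "keyboard_prices.csv")` once per scrape builds up a history you can later load with pandas to look for price trends and seasonal patterns.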

What Data Can You Extract from eBay?

In my experience, these are the most valuable data points:

Product Information

- Title and description - What sellers highlight

- Current price - Both auction and Buy It Now

- Shipping costs - Often overlooked but crucial

- Condition - New, used, refurbished status

- Images - For visual analysis and categorization

Seller Metrics

- Seller rating - Trust indicators

- Number of sold items - Volume metrics

- Location - Geographic distribution

- Return policy - Seller confidence indicators

Market Dynamics

- Bidding activity - Auction engagement

- Time remaining - Urgency factors

- Watchers count - Interest level

- Best offers accepted - Price negotiation patterns
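Once you scrape more than name and price, it helps to give these fields a fixed shape. A minimal sketch using a standard-library dataclass; the field names are my own illustrative choices (the same fields could be declared on a Pydantic model and passed as `output_schema`). `total_cost` adds shipping to the price, because comparing listings on headline price alone ignores shipping:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EbayListing:
    # Product information
    title: str
    price: float
    shipping_cost: float = 0.0
    condition: Optional[str] = None          # e.g. "New", "Used", "Refurbished"
    # Seller metrics
    seller_rating: Optional[float] = None    # positive-feedback percentage
    seller_location: Optional[str] = None
    # Market dynamics
    is_auction: bool = False
    bid_count: int = 0
    watchers: Optional[int] = None

    @property
    def total_cost(self) -> float:
        """What a buyer actually pays: item price plus shipping."""
        return round(self.price + self.shipping_cost, 2)


def cheapest_delivered(listings):
    """Sort listings by total cost rather than headline price."""
    return sorted(listings, key=lambda item: item.total_cost)
```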

Practical Applications

1. Price Optimization Strategy

I've used eBay scraping to optimize my pricing strategy:
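The snippet that follows relies on `analyze_competitor_pricing`, which isn't a library function; it's analysis you write yourself. A minimal sketch of the statistics such a helper might return, assuming you already have a list of scraped prices for comparable items (`summarize_prices` is a hypothetical name):

```python
from statistics import median, quantiles


def summarize_prices(prices):
    """Summarize scraped prices for comparable items.

    Uses the median and quartiles, which are less sensitive than the mean
    to outliers such as broken units or bundle listings.
    """
    clean = sorted(p for p in prices if p is not None and p > 0)
    if not clean:
        raise ValueError("no usable prices")
    if len(clean) > 1:
        q1, _, q3 = quantiles(clean, n=4)
    else:
        q1 = q3 = clean[0]  # quartiles need at least two data points
    return {"median": median(clean), "p25": q1, "p75": q3, "count": len(clean)}
```

With a helper like this, the pricing rule becomes `summarize_prices(prices)["median"] * 0.95`; the quartiles tell you how much room there is around that target.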

```python
# Get price statistics for similar items
# (analyze_competitor_pricing is a helper you write yourself, not a library call)
price_stats = analyze_competitor_pricing(search_term="vintage electronics")

my_optimal_price = price_stats["median"] * 0.95  # price slightly below the median
```

2. Market Timing

Understanding when to list items:
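`analyze_completion_patterns` in the snippet that follows is also code you write yourself. One minimal way to approach it, assuming each completed listing is a dict with an `end_time` datetime, a `sold` flag and a `price` (hypothetical field names), is to group listings by the weekday they ended and compare sell-through rates:

```python
from collections import defaultdict
from statistics import median

# Fixed English names, so results don't depend on the system locale
WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def weekday_sell_through(completed):
    """Per ending weekday: number of listings, share that sold, median sold price."""
    groups = defaultdict(list)
    for listing in completed:
        groups[WEEKDAYS[listing["end_time"].weekday()]].append(listing)
    stats = {}
    for day, items in groups.items():
        sold_prices = [item["price"] for item in items if item["sold"]]
        stats[day] = {
            "listings": len(items),
            "sell_through": len(sold_prices) / len(items),
            "median_price": median(sold_prices) if sold_prices else None,
        }
    return stats


def best_day(stats):
    """Pick the weekday with the highest sell-through rate."""
    return max(stats, key=lambda day: stats[day]["sell_through"])
```

Grouping by end day works as a proxy for listing day with fixed-length auctions, since a 7-day auction ends on the same weekday it started. With only a few listings per day, though, the rates are noisy.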

```python
# Track completed listings to find the best days/times
# (scrape_completed_listings and analyze_completion_patterns are your own helpers)
completion_data = scrape_completed_listings(category="electronics")
best_listing_day = analyze_completion_patterns(completion_data)
```

3. Sourcing Opportunities

Finding underpriced items:
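`find_price_discrepancies` in the snippet that follows is likewise your own helper. A minimal sketch, assuming listings are already matched to the same product through a `product_key` field and carry a `format` of `"auction"` or `"bin"` (all hypothetical names), and that auction prices are final prices from completed auctions rather than current bids on live ones. It flags Buy It Now listings priced at least 20% below the median auction result; the threshold is an arbitrary example:

```python
from collections import defaultdict
from statistics import median


def find_underpriced(listings, margin=0.20):
    """Flag Buy It Now listings priced well below the median auction price.

    listings: dicts with "product_key", "format" ("auction" or "bin") and "price".
    margin: required discount versus the auction median (0.20 = 20% cheaper).
    """
    auction_prices = defaultdict(list)
    for item in listings:
        if item["format"] == "auction":
            auction_prices[item["product_key"]].append(item["price"])

    deals = []
    for item in listings:
        prices = auction_prices.get(item["product_key"])
        if item["format"] != "bin" or not prices:
            continue  # only compare BIN listings that have auction results
        reference = median(prices)
        if item["price"] <= reference * (1 - margin):
            deals.append({**item, "auction_median": reference})
    # Biggest discount first
    return sorted(deals, key=lambda d: d["price"] / d["auction_median"])
```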

```python
# Compare auction vs. Buy It Now prices
# (find_price_discrepancies is your own helper)
arbitrage_opportunities = find_price_discrepancies(category="collectibles")
```

Challenges I've Encountered

1. IP Blocking

eBay's pretty aggressive about blocking scrapers. I learned to:

- Use proper delays between requests

- Rotate user agents

- Implement proxy rotation when needed

- Respect robots.txt (though eBay's is quite restrictive)
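The delay and robots.txt habits need nothing beyond the standard library. A minimal sketch, assuming you fetch pages yourself rather than through ScrapeGraphAI; `PoliteFetcher`, the user-agent string and the 2 to 5 second jitter are illustrative choices, not values eBay publishes:

```python
import random
import time
from urllib import robotparser


class PoliteFetcher:
    """Checks robots.txt rules and spaces out requests with a jittered delay."""

    def __init__(self, robots_lines, user_agent="my-price-research-bot",
                 min_delay=2.0, max_delay=5.0, sleep=time.sleep):
        self.rules = robotparser.RobotFileParser()
        self.rules.parse(robots_lines)      # lines of a robots.txt you downloaded
        self.user_agent = user_agent
        self.min_delay, self.max_delay = min_delay, max_delay
        self.sleep = sleep                  # injectable so tests don't wait
        self.last_request = None

    def allowed(self, url):
        """True if robots.txt permits this user agent to fetch the URL."""
        return self.rules.can_fetch(self.user_agent, url)

    def wait_turn(self):
        """Sleep so consecutive requests are at least a random delay apart."""
        if self.last_request is not None:
            delay = random.uniform(self.min_delay, self.max_delay)
            elapsed = time.monotonic() - self.last_request
            if elapsed < delay:
                self.sleep(delay - elapsed)
        self.last_request = time.monotonic()
```

Download the site's robots.txt once (for example with `urllib.request.urlopen`), pass its lines in, and call `allowed(url)` and then `wait_turn()` before each request.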

2. Dynamic Content

Modern eBay pages load a lot of content dynamically. ScrapeGraphAI handles this well, but traditional scrapers that only parse the initial HTML often miss that content.

3. Rate Limiting

eBay will throttle heavy usage. I found these strategies helpful:

- Batch requests during off-peak hours

- Use efficient targeting (specific searches vs. broad categories)

- Cache results to avoid repeated requests
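Caching is the cheapest of these to add. A minimal sketch of an in-memory cache keyed by URL, assuming a `fetch(url)` callable of your own (for example a small wrapper around the `smartscraper` call); `TTLCache` and the six-hour default lifetime are illustrative:

```python
import time


class TTLCache:
    """Reuse the result for a URL until it is older than ttl_seconds."""

    def __init__(self, fetch, ttl_seconds=6 * 3600, clock=time.monotonic):
        self.fetch = fetch          # callable taking a URL and returning a result
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested
        self.entries = {}           # url -> (stored_at, result)
        self.hits = self.misses = 0

    def get(self, url):
        now = self.clock()
        cached = self.entries.get(url)
        if cached is not None and now - cached[0] < self.ttl:
            self.hits += 1
            return cached[1]
        # Missing or expired: fetch again and remember when
        self.misses += 1
        result = self.fetch(url)
        self.entries[url] = (now, result)
        return result
```

The hit and miss counters also tell you how many requests the cache actually saved.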

Advanced Techniques

Sentiment Analysis on Listings

```python
# Analyze a listing's description for sentiment
def analyze_listing_sentiment(listing_url):
    # Use AI to rate how positive the description is (reuses sgai_client from above)
    response = sgai_client.smartscraper(
        website_url=listing_url,
        user_prompt="Rate the sentiment of this product description from 1 to 10",
    )
    return response["result"]
```

Competitive Intelligence

```python
# Track a specific seller's performance
# (track_seller_metrics and analyze_successful_listings are your own helpers)
competitor_data = track_seller_metrics(seller_id="competitive_seller")
success_patterns = analyze_successful_listings(competitor_data)
```

Price Prediction Models

Using historical data to predict optimal pricing:

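```python
import pandas as pd
from sklearn.linear_model import LinearRegression

# Past sales scraped earlier; the file and column names here are illustrative
historical_ebay_data = pd.read_csv("ebay_sales.csv")
features = ["shipping_cost", "seller_rating", "watchers"]

# Fit sale price on listing features, then predict for a new item (example values)
price_model = LinearRegression().fit(historical_ebay_data[features], historical_ebay_data["price"])
new_item_features = pd.DataFrame([{"shipping_cost": 9.90, "seller_rating": 99.2, "watchers": 14}])
predicted_price = price_model.predict(new_item_features[features])[0]
```

Legal and Ethical Considerations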

Before scraping eBay, consider:

- Terms of Service - eBay's ToS restricts automated access

- Rate Limiting - Don't overwhelm their servers

- Intended use - Consider whether the scraped data is for personal or commercial use

- Public Data Only - Only scrape publicly visible information

Always check the latest terms and consider reaching out to eBay about their API for commercial use.

Tools and Alternatives

While I prefer ScrapeGraphAI, here are other options:

Traditional Scrapers

- BeautifulSoup + Selenium - More control but higher maintenance

- Scrapy - Good for large-scale operations but requires more setup

Commercial APIs

- eBay's Official API - Limited but legitimate

- Third-party services - Various providers with different capabilities
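For comparison, here's roughly what the official route looks like. A minimal sketch that only builds a request for eBay's Browse API `item_summary/search` endpoint (which searches current listings) and parses a response; it assumes you've already obtained an OAuth application access token through eBay's developer program, and it doesn't send anything itself:

```python
from urllib.parse import urlencode
from urllib.request import Request

BROWSE_SEARCH_URL = "https://api.ebay.com/buy/browse/v1/item_summary/search"


def build_search_request(query, token, marketplace="EBAY_IT", limit=50):
    """Build (but don't send) a Browse API search request.

    token: an OAuth application access token (obtaining it is not shown).
    marketplace: X-EBAY-C-MARKETPLACE-ID value, e.g. EBAY_IT for ebay.it.
    """
    url = f"{BROWSE_SEARCH_URL}?{urlencode({'q': query, 'limit': limit})}"
    headers = {
        "Authorization": f"Bearer {token}",
        "X-EBAY-C-MARKETPLACE-ID": marketplace,
        "Accept": "application/json",
    }
    return Request(url, headers=headers)


def summarize_items(payload):
    """Extract (title, price, currency) from a parsed JSON search response."""
    return [
        (item["title"], float(item["price"]["value"]), item["price"]["currency"])
        for item in payload.get("itemSummaries", [])
        if "price" in item
    ]
```

Sending it is then `json.load(urllib.request.urlopen(request))`, and the structured JSON means no HTML parsing at all.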

Browser Automation

- Playwright - Good for complex interactions

- Puppeteer - Node.js automation, primarily for Chrome/Chromium

Results and ROI

After six months of automated eBay analysis:

- 40% increase in successful sales

- 25% higher average sale prices

- 60% reduction in time spent on market research

- Better inventory decisions based on demand patterns

The time investment in setting up scraping paid off quickly.

Best Practices I've Learned

- Start small - Test with limited queries first

- Monitor performance - Track success rates and adjust

- Respect limits - Don't be greedy with request frequency

- Stay updated - eBay changes their site structure regularly

- Have backups - Multiple data sources prevent single points of failure
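Monitoring success rates is easiest if you count outcomes at the one place requests are made. A minimal sketch, assuming a `fetch(url)` callable of your own that raises an exception on failure; `RequestStats`, the three attempts and the doubling backoff are illustrative choices:

```python
import time


class RequestStats:
    """Retries with exponential backoff and tracks the share of attempts that succeed."""

    def __init__(self, attempts=3, base_delay=2.0, sleep=time.sleep):
        self.attempts = attempts
        self.base_delay = base_delay
        self.sleep = sleep          # injectable so tests don't wait
        self.successes = 0
        self.failures = 0

    @property
    def success_rate(self):
        """Fraction of attempts that succeeded, or None before any attempt."""
        total = self.successes + self.failures
        return self.successes / total if total else None

    def call(self, fetch, url):
        """Try fetch(url) up to `attempts` times; return None if every try fails."""
        for attempt in range(self.attempts):
            try:
                result = fetch(url)
            except Exception:
                self.failures += 1
                if attempt < self.attempts - 1:
                    self.sleep(self.base_delay * 2 ** attempt)  # 2s, 4s, ...
                continue
            self.successes += 1
            return result
        return None
```

A falling `success_rate` is usually the first sign of throttling or a changed page layout, which is the cue to slow down or update your prompts.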

Common Mistakes to Avoid

- Over-requesting - Getting blocked kills productivity

- Ignoring structure changes - Sites evolve, scrapers must too

- Poor error handling - Failures should be graceful

- Inadequate data cleaning - Garbage in, garbage out

- Legal blindness - Always consider terms of service
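Price strings are a typical cleaning problem: Italian-formatted prices such as `EUR 1.299,99` use a dot for thousands and a comma for decimals. A minimal sketch of a normalizer (`parse_price` is a hypothetical name), assuming decimals have one or two digits; anything with more than one number, such as a price range, returns None instead of a guess:

```python
import re

_NUMBER = re.compile(r"\d[\d.,]*")


def parse_price(text):
    """Convert a scraped price string to a float, or None if it's ambiguous.

    Handles "EUR 1.299,99", "1.299,99 €", "€29,99" and "$1,299.99".
    """
    matches = _NUMBER.findall(text or "")
    if len(matches) != 1:
        return None  # no number, or several (e.g. a price range)
    number = matches[0].rstrip(".,")
    # The last separator is the decimal point only if 1-2 digits follow it;
    # every other "." or "," is a thousands separator
    last_sep = max(number.rfind(","), number.rfind("."))
    if last_sep != -1 and 1 <= len(number) - last_sep - 1 <= 2:
        integer, decimals = number[:last_sep], number[last_sep + 1:]
    else:
        integer, decimals = number, ""
    digits = integer.replace(",", "").replace(".", "")
    return float(f"{digits}.{decimals}" if decimals else digits)
```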

Future Opportunities

eBay scraping keeps evolving in a few directions:

- AI-powered analysis - Better pattern recognition

- Real-time monitoring - Instant price alerts

- Cross-platform integration - eBay + Amazon + others

- Predictive analytics - Forecasting market trends

- Automated actions - Dynamic pricing adjustments

Conclusion

eBay scraping transformed how I approach online selling. What started as a manual, time-consuming process became an automated intelligence system that gives me competitive advantages.

The key is starting simple, respecting the platform's limits, and gradually building more sophisticated analysis capabilities.

Whether you're a casual seller looking to optimize prices or a business doing market research, the data insights available through careful eBay scraping can be game-changing.

Just remember: with great scraping power comes great responsibility. Use these techniques ethically and in compliance with platform terms.
