In today's data-driven world, businesses need to process massive amounts of information to stay competitive. Traditional web scraping methods often fall short when dealing with large-scale data collection, facing challenges like IP blocks, CAPTCHAs, and complex website structures. This is where AI-powered scraping tools come in, revolutionizing how we collect and process web data at scale.

In this comprehensive guide, we'll explore the top AI scrapers that can handle massive data collection tasks efficiently. Whether you're a developer looking for powerful APIs, a business analyst needing market intelligence, or a data scientist working on large datasets, you'll find the perfect tool for your needs.

Best Overall: ScrapeGraphAI

Starts at $19/month for 5,000 credits and 30 requests/minute. Offers AI-powered, prompt-based data extraction, ideal for developers seeking intelligent, adaptable scraping with 5 agent runs/day.

Best Value: Apify

Free plan provides $5 usage and 25 concurrent runs. Paid plans start at $35/month. A versatile platform with 3,000+ pre-built Actors, offering flexible and scalable data collection solutions.

Most Featured: Octoparse

Offers a free plan with 10 tasks and 10K data exports/month. Paid plans start at $99/month, including 500+ templates, 100 tasks, IP rotation, and up to 6 concurrent cloud processes.

Dealing with huge amounts of data can be a real headache, right?

Traditional ways of getting information often hit roadblocks like tricky websites or getting blocked.

This can slow down your projects and keep you from getting the insights you need.

But what if there was a smarter way?

AI scrapers are here to change the game.

They can handle those tough challenges, making it easier to gather all the data you want.

Ready to see how these smart tools can help you get more done?

Keep reading to discover the 7 Best AI Scrapers for Large-Scale Data!

What are the Best AI Scrapers for Large-Scale Data?

When you need to collect a lot of data, regular scraping can fall short.

Large-scale data extraction requires powerful tools that can handle massive request volumes, avoid blocks, and deliver data efficiently.

We've compiled a list of the top AI scrapers that excel at handling large volumes of web data. Each tool has been thoroughly tested and evaluated based on key criteria including scalability, anti-blocking features, and performance.

1. ScrapeGraphAI

ScrapeGraphAI is excellent for tackling complex and large-scale data needs.

It uses AI to understand website structures better, which means it's good at navigating tricky sites.

If you have a huge e-commerce site with lots of different product pages, ScrapeGraphAI can efficiently map it out and pull the data you need.

It's designed to be smart about how it scrapes, making it suitable for big projects.

Key Benefits

- Intelligent identification of data points.

- Automatic handling of website changes.

- Easy integration with other tools.

- Quick setup in just a few minutes.

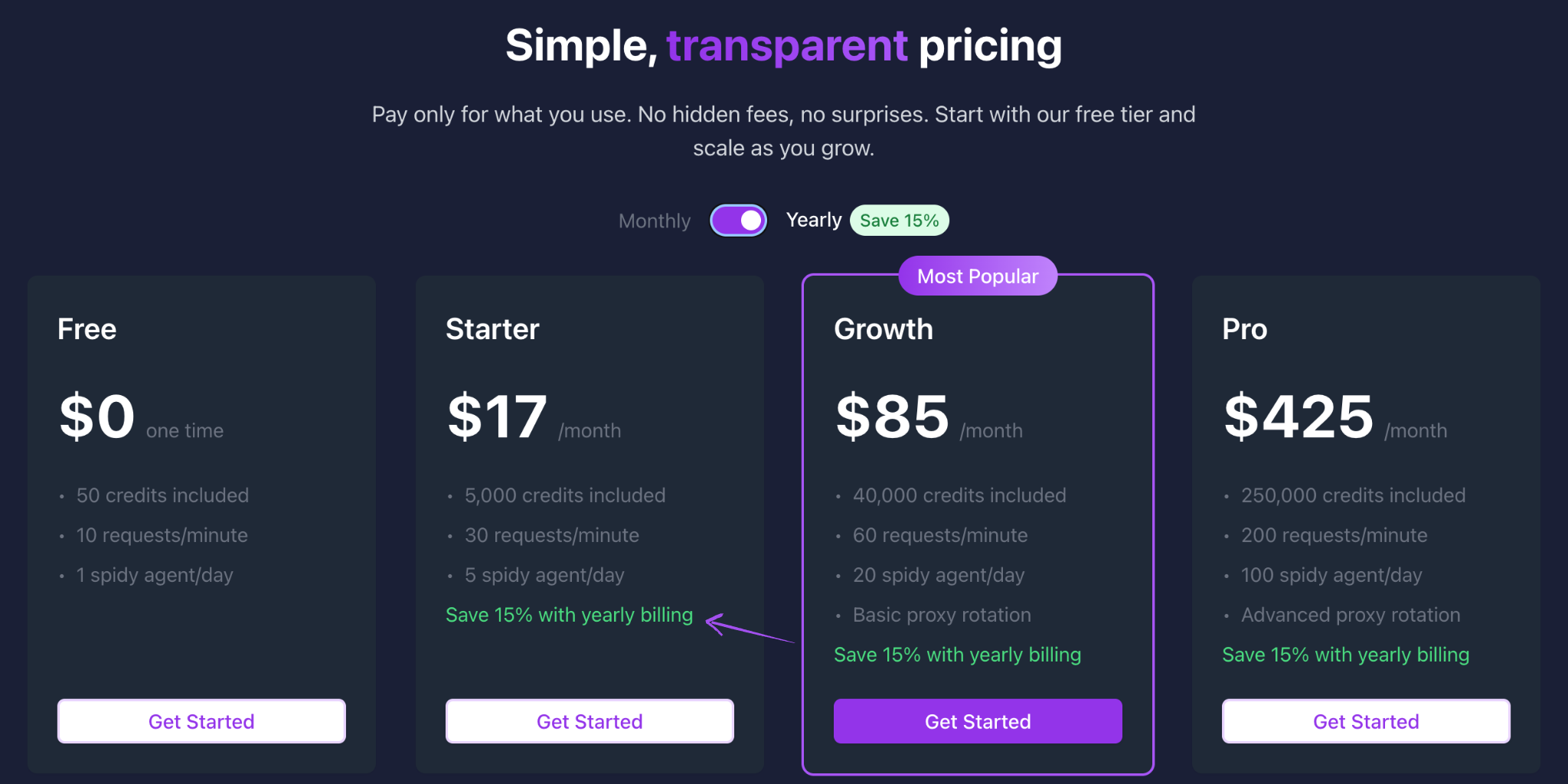

Pricing

- Free: $0/month.

- Starter: $17/month.

- Growth: $85/month.

- Pro: $425/month.

- Enterprise: Custom Pricing

Pros & Cons

Here's what I think about ScrapeGraphAI:

Pros:

- It's super easy to use.

- AI makes it very accurate.

- Handles website changes well.

- Good value for the money.

Cons:

- Fewer integrations than some.

- Advanced features need learning.

Rating

9/10

ScrapeGraphAI is really smart and accurate, which saves a lot of time. The price is fair for what it offers, and the guarantee is a nice touch. It loses a point because the integrations are a bit limited, and getting the most out of the advanced features takes some effort.

2. Apify

Apify is a powerhouse for big scraping jobs. It's not just one tool, but a whole platform.

You can find ready-made "Actors" for common tasks, or build your own custom solutions.

It's super reliable for pulling huge amounts of data.

If you need to scrape millions of product details or track real-time prices across many sites, Apify can handle it.

Key Benefits

- A wide range of pre-built scraping tools (Actors).

- Scalable cloud platform for running tasks.

- APIs for custom integrations.

- Options for building your own Actors.

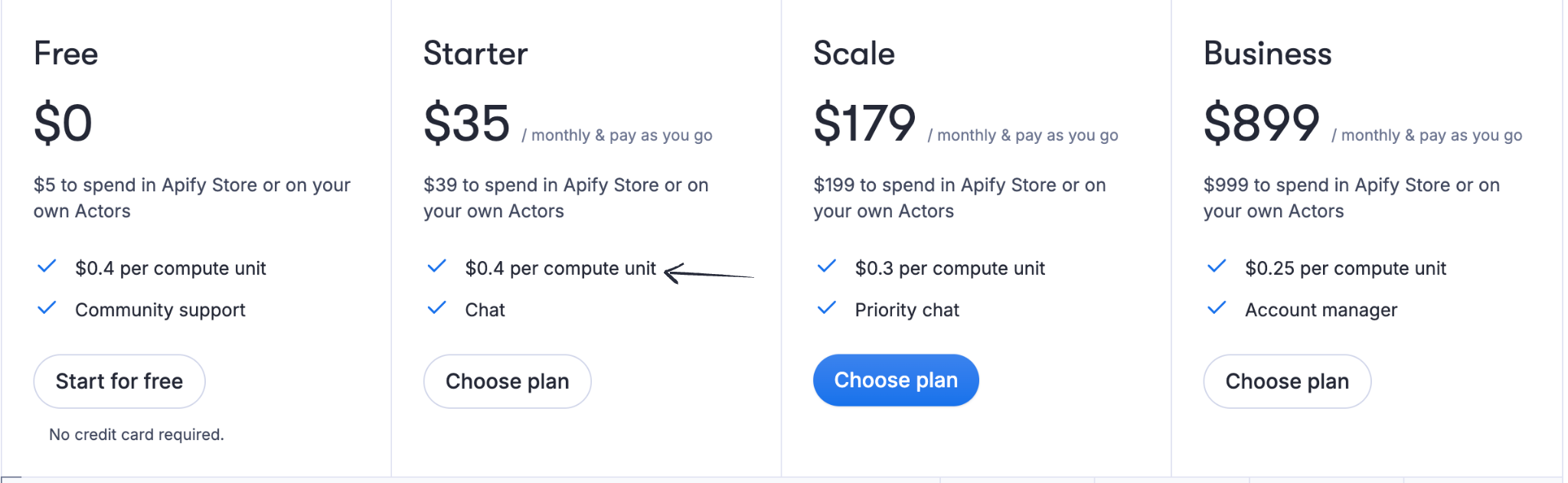

Pricing

- Free: $0/month.

- Starter: $35/month.

- Scale: $179/month.

- Business: $899/month.

Pros & Cons

Here's my take on Apify:

Pros:

- Huge selection of ready-made tools.

- Very scalable cloud infrastructure.

- Good for developers with its APIs.

- The free tier is great for trying things out.

Cons:

- It can be a little bit complex sometimes.

- Costs can add up with heavy usage.

- The quality of actors can vary.

Rating

8.5/10

Apify is a powerful and flexible platform with a lot to offer, especially for developers and those with more complex needs. However, the sheer number of options and the usage-based pricing might be a bit daunting for beginners.

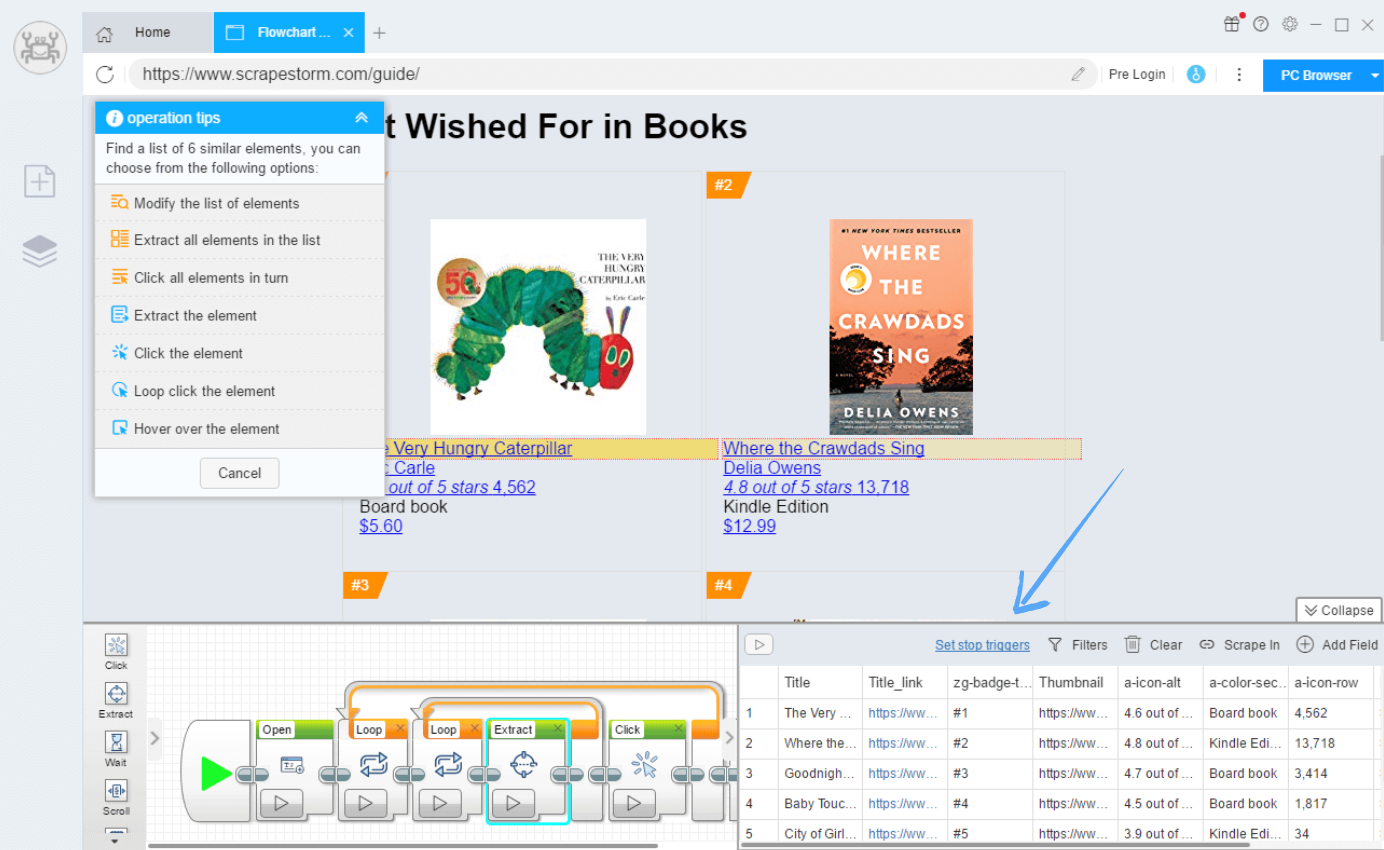

3. Octoparse

Octoparse, while being user-friendly, also offers robust features for larger data sets.

You can set up scheduled cloud runs, which helps in collecting data continuously over time.

This makes it suitable for monitoring changes on many product pages.

Its ability to handle dynamic content means it can scrape from modern e-commerce sites effectively, even at scale.

Key Benefits

- Visual point-and-click interface.

- Cloud-based scraping capabilities.

- Scheduled scraping and automation.

- Ability to handle complex websites.

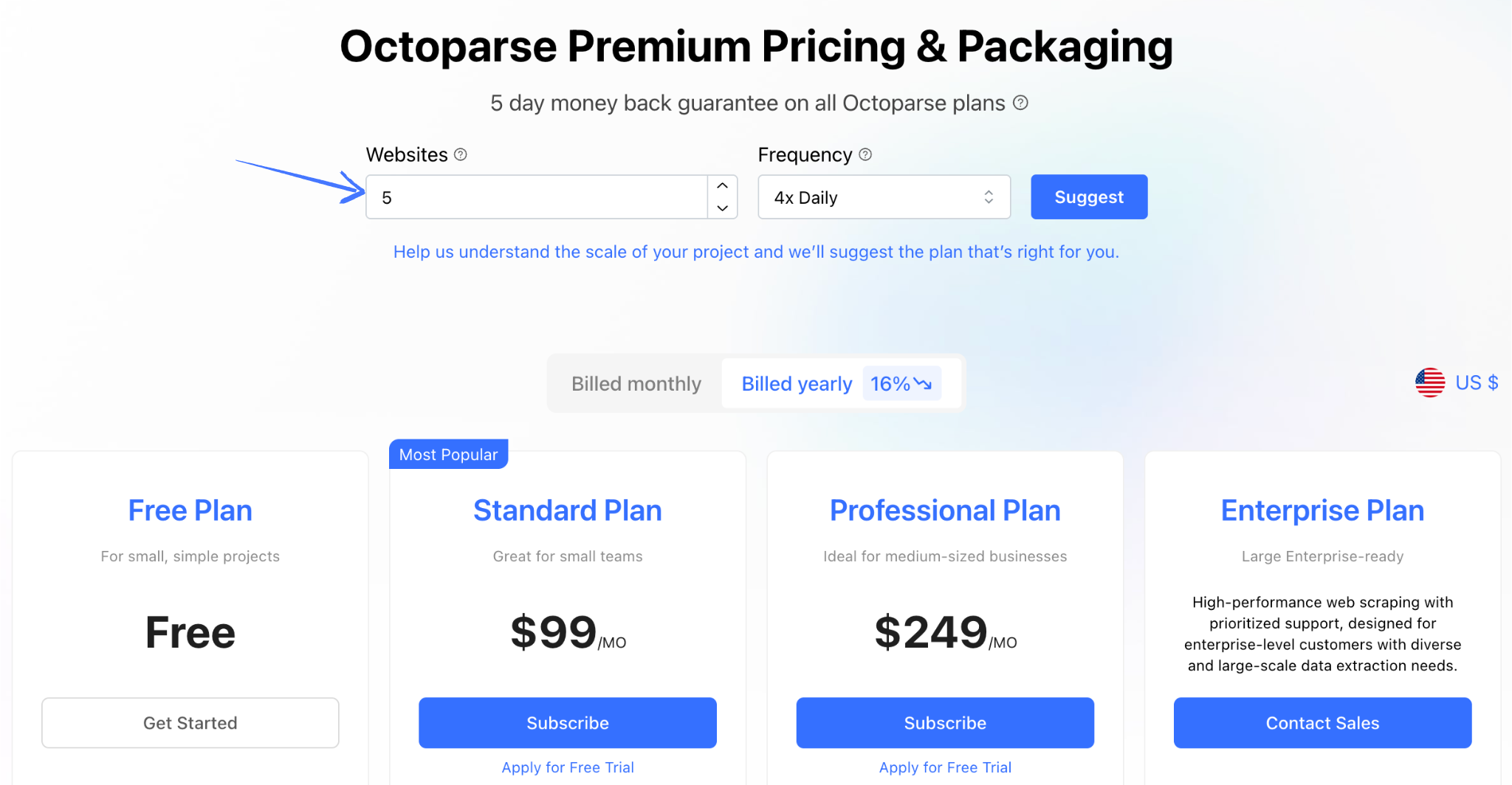

Pricing

- Free: $0/month.

- Standard: $99/month.

- Professional: $249/month.

- Enterprise: Custom Pricing

Pros & Cons

Here's my take on Octoparse:

Pros:

- Very user-friendly interface.

- Great for non-coders like me.

- Cloud scraping is super handy.

- Scheduling is a big time-saver.

Cons:

- It can get pricey for teams.

- Cloud server limits exist.

- Sometimes struggles with very dynamic sites.

Rating

8.0/10

Octoparse is fantastic for people who don't want to code, and the cloud features are a real plus. However, the cost for teams can add up, and it's not always perfect with super complex websites. The lack of a specific warranty is also a minor drawback.

4. ScrapingBee

ScrapingBee is perfect for large-scale data if you're a developer.

It's an API that takes care of all the hard stuff like proxies and browser rendering.

This means you can send it millions of requests without worrying about getting blocked.

It's built for reliability and speed, which are critical when dealing with vast amounts of e-commerce information.
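To make the "API that handles the hard stuff" idea more concrete, here's a rough sketch of how a single request to this kind of service might be put together. The endpoint and the `api_key`, `url`, and `render_js` parameter names follow ScrapingBee's public HTTP API as best I understand it, so treat them as assumptions and check the current docs before relying on them. The key and the target URL are placeholders, and the function only builds the URL; it doesn't send anything.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Assumed endpoint; verify against the provider's current documentation.
API_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_request_url(api_key: str, target_url: str, render_js: bool = True) -> str:
    """Build the GET URL for one scrape request (nothing is sent)."""
    params = {
        "api_key": api_key,
        "url": target_url,  # urlencode percent-encodes this value
        "render_js": "true" if render_js else "false",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


# Placeholder key and target page, for illustration only.
request_url = build_request_url("YOUR_API_KEY", "https://example.com/products?page=2")
print(request_url)

# Decoding the query string recovers the original target URL intact.
decoded = parse_qs(urlsplit(request_url).query)
print(decoded["url"][0])
```

The point of the sketch is that the target page travels as an encoded parameter, so your own code never talks to the target site directly. The proxying and rendering happen on the service's side.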

Key Benefits

- Handling of proxies and CAPTCHAs.

- JavaScript rendering for dynamic sites.

- Simple API for easy integration.

- Geolocation targeting for specific data.

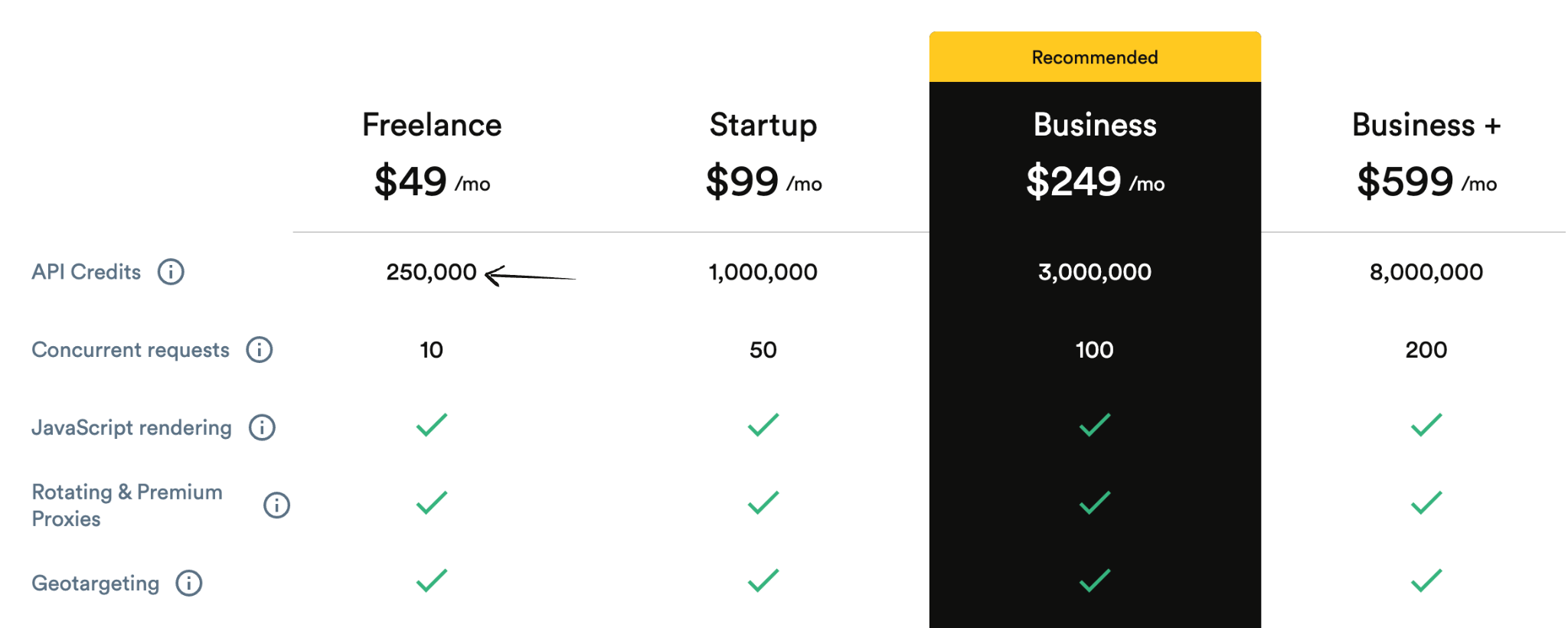

Pricing

- Freelance: $49/month.

- Startup: $99/month.

- Business: $249/month.

- Business+: $599/month.

Pros & Cons

Here's what I think about ScrapingBee:

Pros:

- Great at avoiding blocks and captchas.

- Handles JavaScript websites well.

- Simple API is easy to use.

- Good for reliable data delivery.

Cons:

- Less control over the scraping process.

- It can become costly for large volumes.

- Fewer visual tools for non-coders.

Rating

8/10

ScrapingBee is well known for its fast scraping. If you're tired of getting blocked while scraping, this service is excellent. It handles the technical headaches for you. However, it can get expensive for large-scale needs, and you have less direct control over the scraping logic.

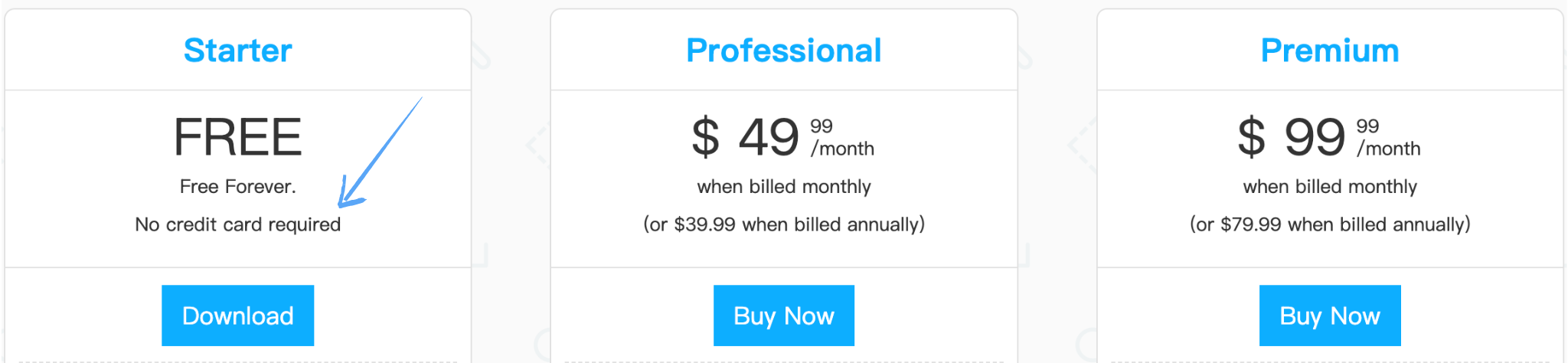

5. ScrapeStorm

ScrapeStorm is another strong contender for big data projects due to its smart recognition features.

It can often detect data patterns automatically, speeding up the setup for large scraping tasks.

Its cloud platform allows for running many scraping jobs simultaneously, which is essential when you're trying to collect information from thousands or millions of product listings.

Key Benefits

- Fully automated scraping process.

- Intelligent pattern recognition.

- Support for various data export formats.

- Ability to handle complex website structures.

Pricing

- Free: $0/month.

- Professional: $49.99/month.

- Premium: $99.99/month.

Pros & Cons

Here are my thoughts on ScrapeStorm:

Pros:

- Automation is really impressive.

- It handles complex sites well.

- Lots of tasks on the basic plan.

- Good for users with less experience.

Cons:

- Less control over specific details.

- Advanced customization can be tricky.

- The interface might feel different initially.

Rating

7.5/10

ScrapeStorm's automation is a big plus, especially if you're dealing with many different websites. It's also quite capable of handling complex structures. However, the trade-off for automation is less fine-grained control, and the interface might take a little getting used to.

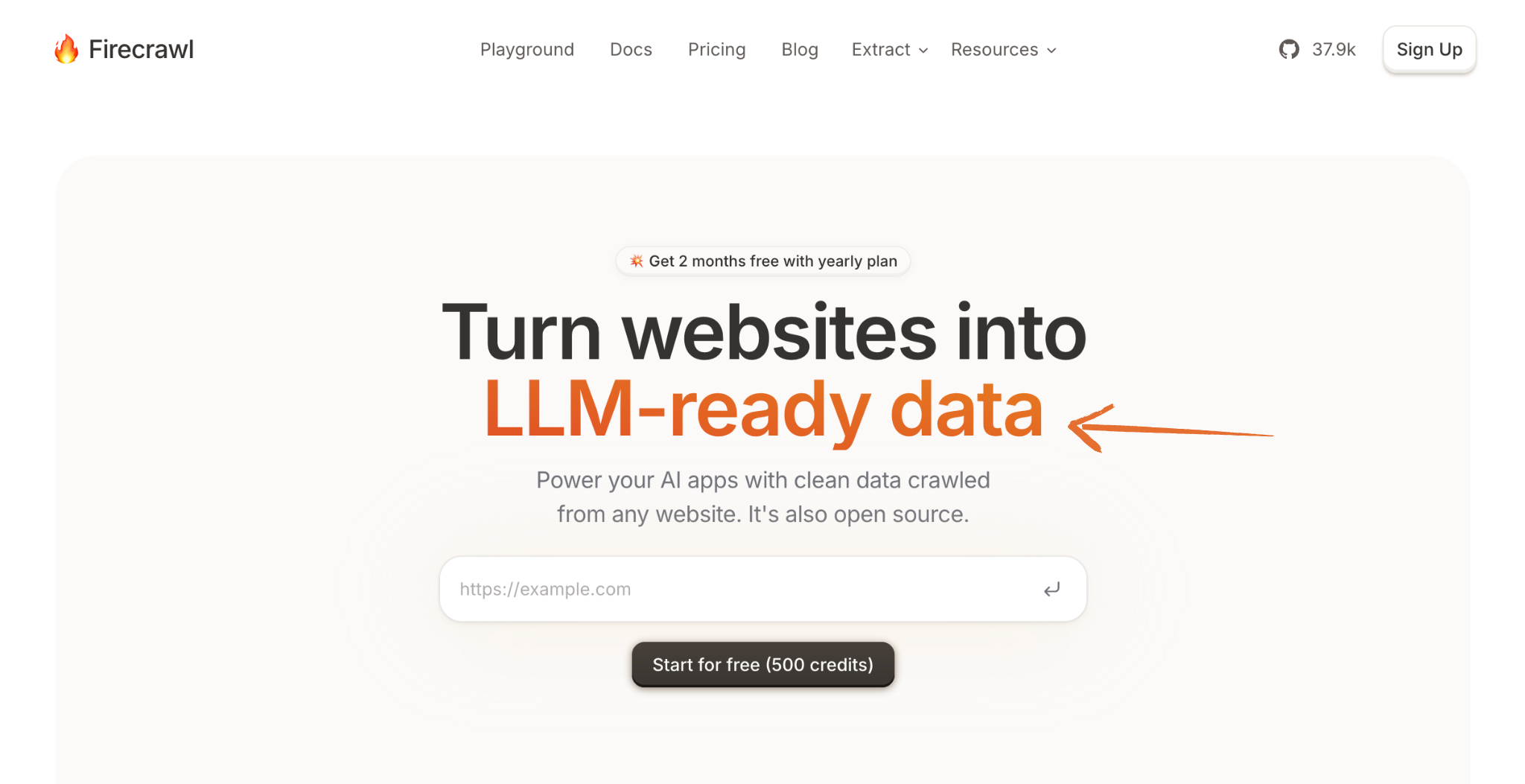

6. Firecrawl

Firecrawl excels at transforming entire websites into structured data, which is crucial for large-scale operations.

Instead of just scraping individual elements, it can process full web pages efficiently.

If you need to quickly ingest vast amounts of content, like product descriptions or articles from multiple e-commerce blogs, Firecrawl can convert it into a clean, usable format at a high volume.
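If "turning a page into a clean, usable format" sounds abstract, here's a tiny sketch of the general idea using only Python's standard library. This is not how Firecrawl works internally; it's a simplified stand-in that strips markup and script/style contents and returns a small record. The `TextExtractor` class, the `page_to_record` helper, and the sample HTML are all made up for illustration.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of script and style tags."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def page_to_record(url: str, html: str) -> dict:
    """Convert one HTML page into a simple structured record."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return {"url": url, "text": "\n".join(parser.chunks)}


# Hypothetical product page used only as sample input.
sample = (
    "<html><head><style>p{color:red}</style></head>"
    "<body><h1>Blue Mug</h1><p>Holds 350 ml.</p>"
    "<script>track()</script></body></html>"
)
record = page_to_record("https://example.com/mug", sample)
print(record["text"])
```

A real service layers crawling, rendering, and smarter content detection on top of this, but the output shape (one clean record per page) is the part that matters when you're ingesting content in bulk.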

Key Benefits

- High-performance crawling engine.

- Real-time data delivery options.

- Scalable architecture for large projects.

- Integration with popular cloud platforms.

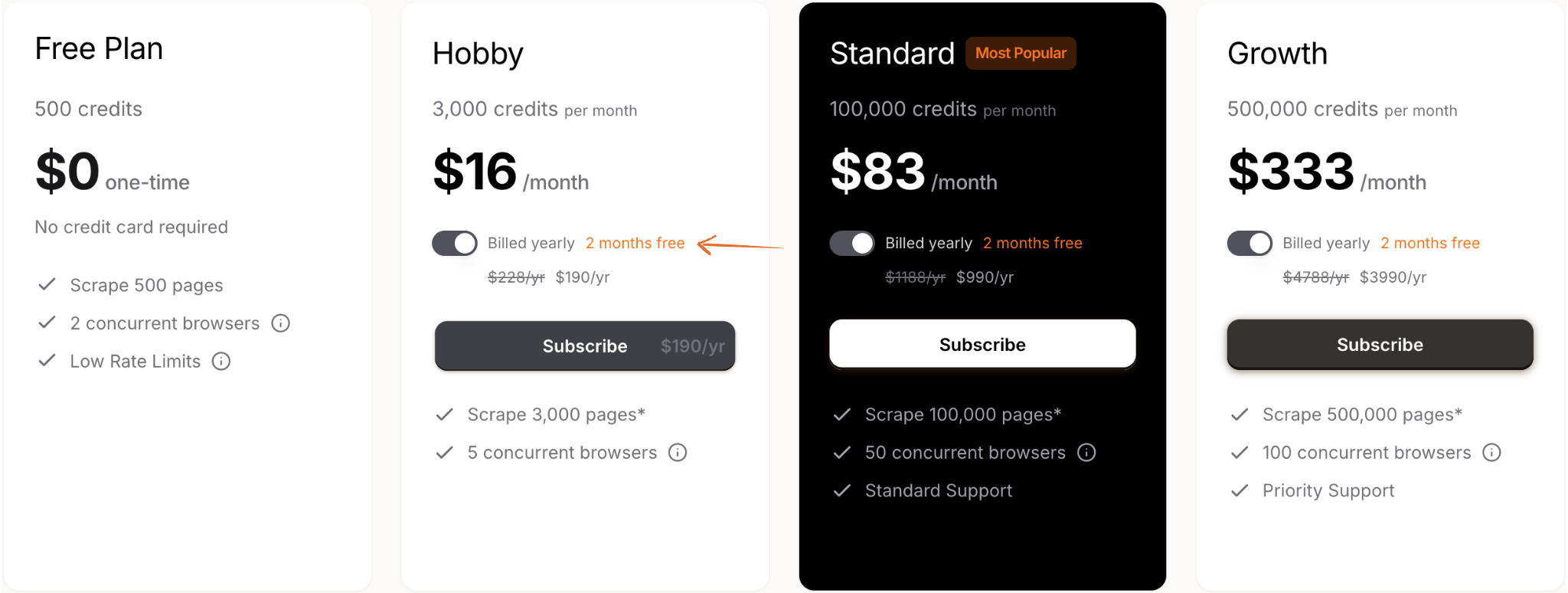

Pricing

- Free: $0/month.

- Hobby: $16/month.

- Standard: $83/month.

- Growth: $333/month.

Pros & Cons

Here's what I think about Firecrawl:

Pros:

- Incredibly fast for big jobs.

- Designed for large-scale scraping.

- Real-time data is very useful.

- Integrates well with cloud services.

Cons:

- It might be overkill for small tasks.

- Pricing can be less transparent upfront.

- Possibly requires more technical knowledge.

Rating

7.5/10

Firecrawl is a strong contender if you need to scrape massive amounts of data quickly. However, it might be too much for smaller projects, and getting clear pricing information can take an extra step.

7. Browse AI

Browse AI is surprisingly powerful for larger jobs despite its simple interface.

You can set up "monitors" that automatically extract data at regular intervals.

This is great for keeping an eye on vast numbers of competitor prices or product availability across many online stores.

Its ease of use combined with its automation capabilities makes it a solid choice for scaling up your data collection efforts.
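Under the hood, a "monitor" boils down to comparing what a scheduled run found this time with what it found last time. Here's a minimal sketch of that comparison. It is not Browse AI's implementation, and the `fingerprint` and `detect_changes` names, along with the sample SKUs and prices, are invented for the example.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Return a stable hash of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def detect_changes(previous: dict, current: dict) -> dict:
    """Compare stored fingerprints (id -> hash) with fresh records (id -> record)."""
    added, changed = [], []
    for item_id, record in current.items():
        if item_id not in previous:
            added.append(item_id)
        elif previous[item_id] != fingerprint(record):
            changed.append(item_id)
    removed = [item_id for item_id in previous if item_id not in current]
    return {
        "added": sorted(added),
        "changed": sorted(changed),
        "removed": sorted(removed),
    }


# Made-up data from two consecutive runs.
last_run = {
    "sku-1": {"price": 19.99, "in_stock": True},
    "sku-2": {"price": 5.00, "in_stock": True},
}
this_run = {
    "sku-1": {"price": 17.99, "in_stock": True},
    "sku-3": {"price": 8.50, "in_stock": False},
}

stored = {item_id: fingerprint(rec) for item_id, rec in last_run.items()}
report = detect_changes(stored, this_run)
print(report)
```

Storing only fingerprints keeps the comparison cheap even when you're watching a very large number of listings, since you never need to keep the full previous snapshot around.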

Key Benefits

- No-code, visual data extraction.

- Ability to monitor website changes.

- Integration with popular automation tools.

- Pre-built robots for common use cases.

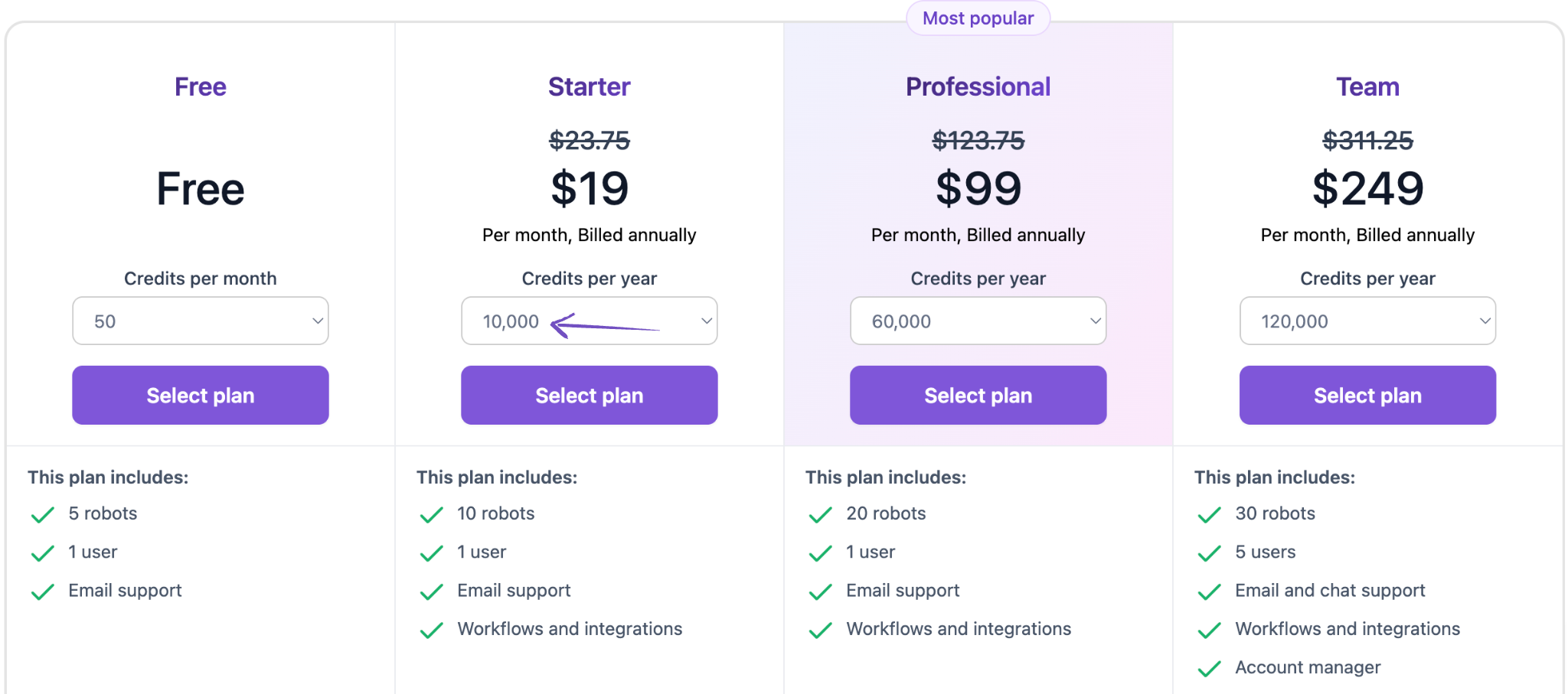

Pricing

- Free: $0/month.

- Starter: $19/month.

- Professional: $99/month.

- Team: $249/month.

Pros & Cons

Here are my thoughts on Browse AI:

Pros:

- Extremely easy to get started.

- No coding knowledge needed at all.

- Monitoring changes is a great feature.

- Integrates with many other tools.

Cons:

- It can become expensive as you scale.

- Might not handle very complex sites.

- Relies heavily on visual recognition.

Rating

7/10

Browse AI's ease of use is a huge advantage, especially for non-technical users. The ability to monitor changes is also very valuable. However, the cost can add up, and it might not be the best for the most intricate website structures.

What to look for when choosing AI Scrapers for Large-Scale Data?

With the detailed reviews covered, it's worth understanding what makes a great AI scraper for large-scale operations. Here are the essential factors to consider:

- Scalability: Can it handle millions of pages without slowing down or crashing? This is key for huge projects.

- Anti-Blocking Features: Does it have strong ways to avoid getting blocked? Look for IP rotation, proxy management, and CAPTCHA solving.

- Dynamic Content Handling: Can it scrape data from websites that use a lot of JavaScript or constantly change?

- Data Quality & Accuracy: Does it ensure the data it pulls is correct and clean? Look for features like data parsing and cleaning.

- Performance: How fast can it extract data? Speed matters a lot for large volumes.

- Reliable Storage: Does it offer good options for storing massive amounts of structured data?

- Automation & Scheduling: Can you set it up to run tasks automatically and at specific times?
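One quick way to sanity-check the scalability and performance points above is to estimate how long a job would take under a plan's rate limit. The sketch below assumes one request per page, a steady rate limit, and retries that fail independently at a fixed rate, none of which is guaranteed in practice. The `estimated_hours` helper is hypothetical; the 30 requests/minute figure is the one quoted for ScrapeGraphAI's entry plan earlier in this article.

```python
def estimated_hours(pages: int, requests_per_minute: float, retry_rate: float = 0.0) -> float:
    """Estimate wall-clock hours to fetch `pages` under a fixed rate limit.

    retry_rate is the assumed fraction of requests that fail and must be retried.
    """
    if requests_per_minute <= 0:
        raise ValueError("requests_per_minute must be positive")
    if not 0 <= retry_rate < 1:
        raise ValueError("retry_rate must be in [0, 1)")
    # With independent failures, each page needs 1 / (1 - retry_rate) requests on average.
    total_requests = pages / (1 - retry_rate)
    return total_requests / requests_per_minute / 60


small_job = estimated_hours(5_000, 30)        # a few hours
big_job = estimated_hours(1_000_000, 30)      # several hundred hours
big_job_with_retries = estimated_hours(1_000_000, 30, retry_rate=0.2)

print(f"5,000 pages: {small_job:.1f} h")
print(f"1,000,000 pages: {big_job:.1f} h")
print(f"1,000,000 pages, 20% retries: {big_job_with_retries:.1f} h")
```

If the estimate for your target volume comes out in weeks rather than hours, that's a sign you need a higher tier, more concurrency, or a different tool.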

How can AI Scrapers for Large-Scale Data change the game?

As we've seen in our detailed tool reviews, AI scrapers for large-scale data are changing how businesses collect and process information, because they let you see the whole picture.

Instead of just a few products, you can track millions.

This means you get a complete view of the market, not just a small piece.

This power helps businesses make much better decisions.

You can spot big trends faster, understand every competitor's move, and react quickly to market shifts.

It's like having a superpower for market research.

Ultimately, these tools give you a huge advantage.

They turn overwhelming amounts of web data into clear, actionable insights.

This helps businesses win in today's fast-moving digital world.

Wrapping Up

After reviewing the 7 best AI scrapers for large-scale data collection, it's clear that these tools are essential for modern businesses. Whether you choose ScrapeGraphAI for its AI-powered intelligence, Apify for its value, or any other tool from our list, you'll be equipped to handle massive data collection tasks efficiently.

Remember to consider the key factors we discussed when making your choice, and don't hesitate to refer to our FAQ section for additional guidance.

Frequently Asked Questions

What is an AI scraper for large-scale data?

An AI scraper for large-scale data is a tool that uses artificial intelligence to collect massive amounts of information from websites automatically. It's designed for efficiency and handling complex, high-volume data extraction tasks.

Why is AI important for large-scale scraping?

AI helps manage the complexities of large-scale scraping, like bypassing anti-bot measures and adapting to website changes. It makes the process more reliable and efficient for collecting huge datasets.

Can these tools handle dynamic websites?

Yes, most AI scrapers for large-scale data are built to handle dynamic websites that use JavaScript or frequently update their content. They use advanced techniques to ensure accurate data extraction.

How do these tools prevent IP blocking?

They often use features like automatic IP rotation, residential proxies, and smart request management to avoid detection and blocking by websites, ensuring continuous data flow for large projects.
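For a feel of what "IP rotation" and "smart request management" mean in practice, here's a simplified sketch: requests are spread across a pool of proxies in round-robin order, and failed attempts wait longer each time before retrying. Real services are far more sophisticated than this. The `plan_requests` and `backoff_delays` helpers and the proxy hostnames are hypothetical, and nothing here sends traffic.

```python
from itertools import cycle


def plan_requests(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    rotation = cycle(proxies)
    return [(url, next(rotation)) for url in urls]


def backoff_delays(base=1.0, factor=2.0, cap=30.0, attempts=5):
    """Return capped exponential wait times (seconds) for successive retries."""
    return [min(cap, base * factor ** n) for n in range(attempts)]


# Placeholder proxy pool and target URLs.
proxy_pool = ["proxy-a.example:8080", "proxy-b.example:8080"]
targets = [f"https://example.com/item/{i}" for i in range(1, 4)]

plan = plan_requests(targets, proxy_pool)
for url, proxy in plan:
    print(url, "via", proxy)

print(backoff_delays())            # waits grow: 1, 2, 4, 8, 16 seconds
print(backoff_delays(attempts=7))  # later waits are capped at 30 seconds
```

Rotation spreads the load so no single address draws attention, while the backoff keeps retries from hammering a site that has already started refusing requests.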

What kind of data can I collect at scale?

You can collect vast amounts of data like product catalogs, pricing information, customer reviews, market trends, news articles, and competitor intelligence from numerous sources across the web.
